2024

Sanji Yang

CONTROL: Uncanny Valley Show

Unit 3

Summer Show

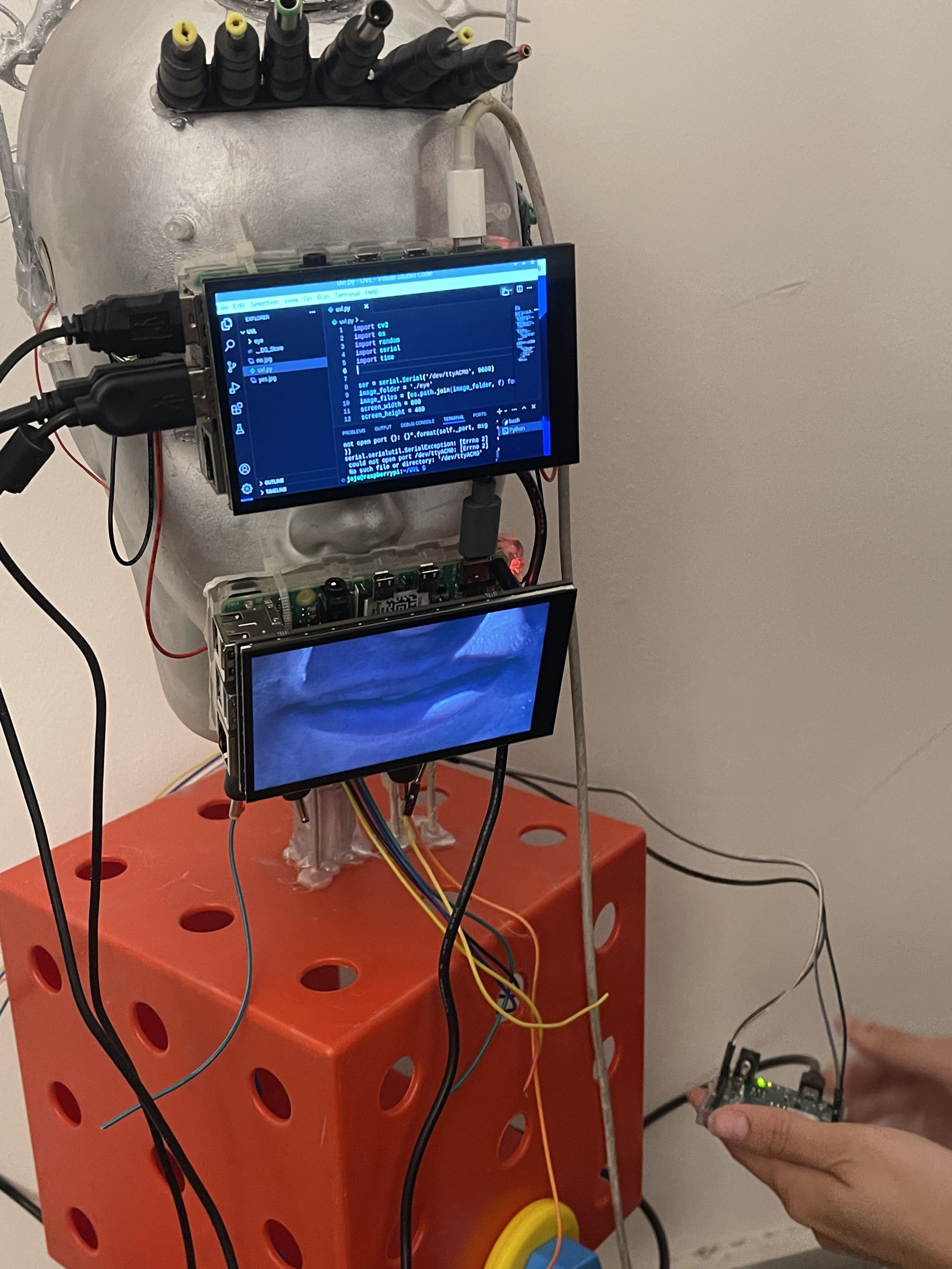

For Unit 3, I collaborated with JOJO, a classmate from CCI. JOJO was responsible for the software side of the installation, and I was responsible for its hardware construction.

We revised the drawings twice in total. Following our instructor's advice, and considering the feasibility and safety of the installation, we abandoned the original plan for a head-mounted wearable installation and changed it to an interactive installation placed on the floor.

During the preparation stage, we carried out extensive preliminary testing of the installation's hardware and software to make sure it would run reliably.

Some work photos of JOJO and me

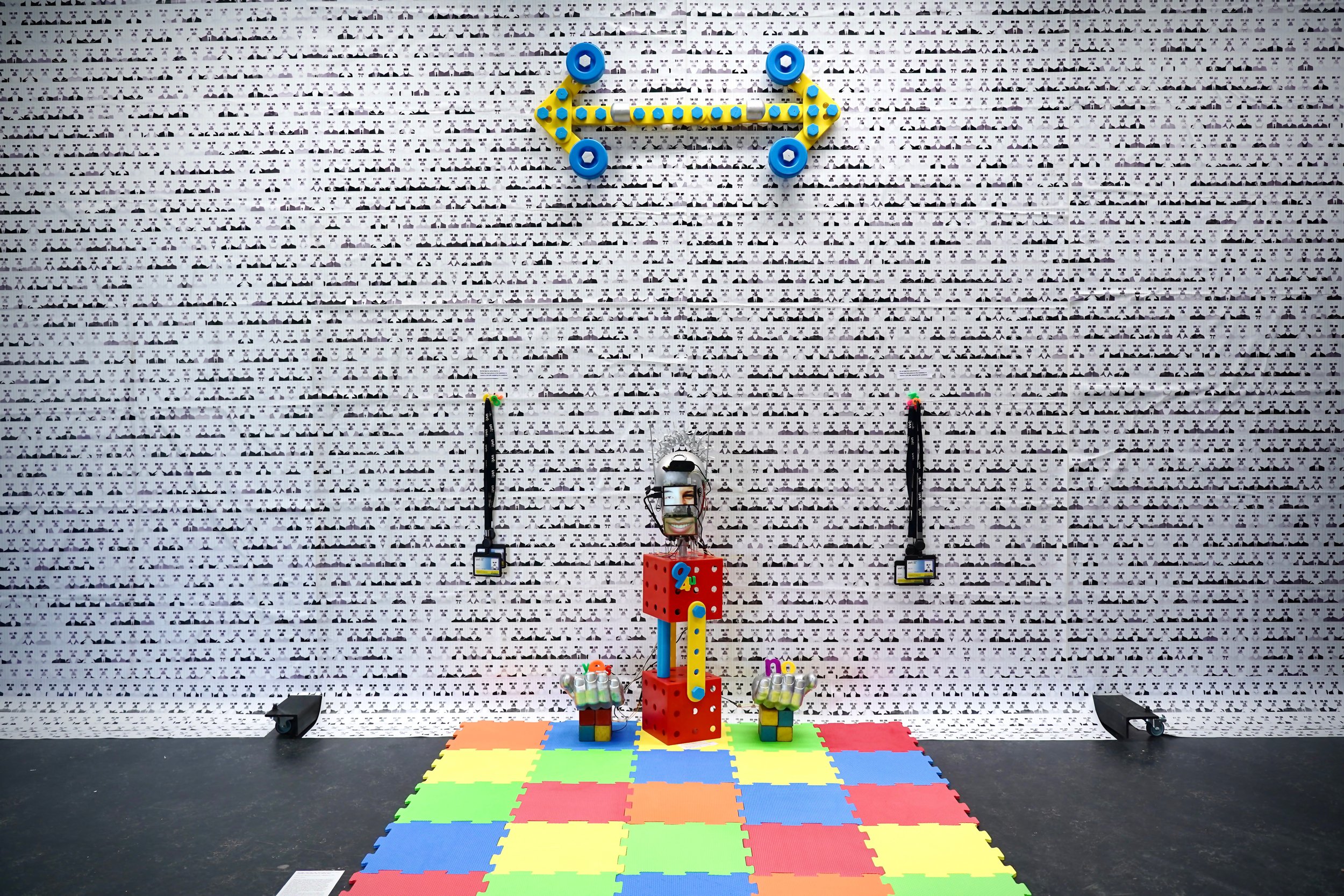

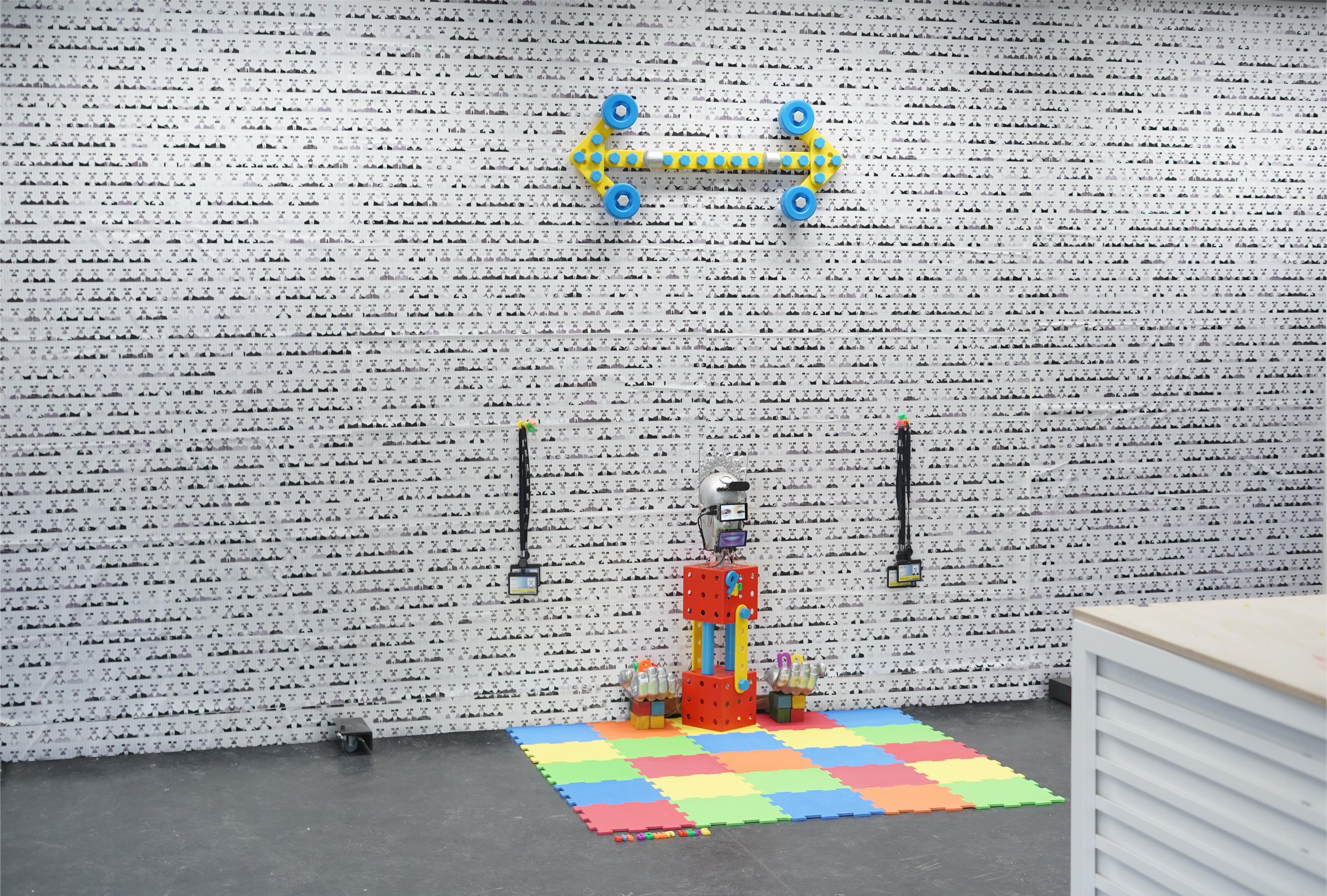

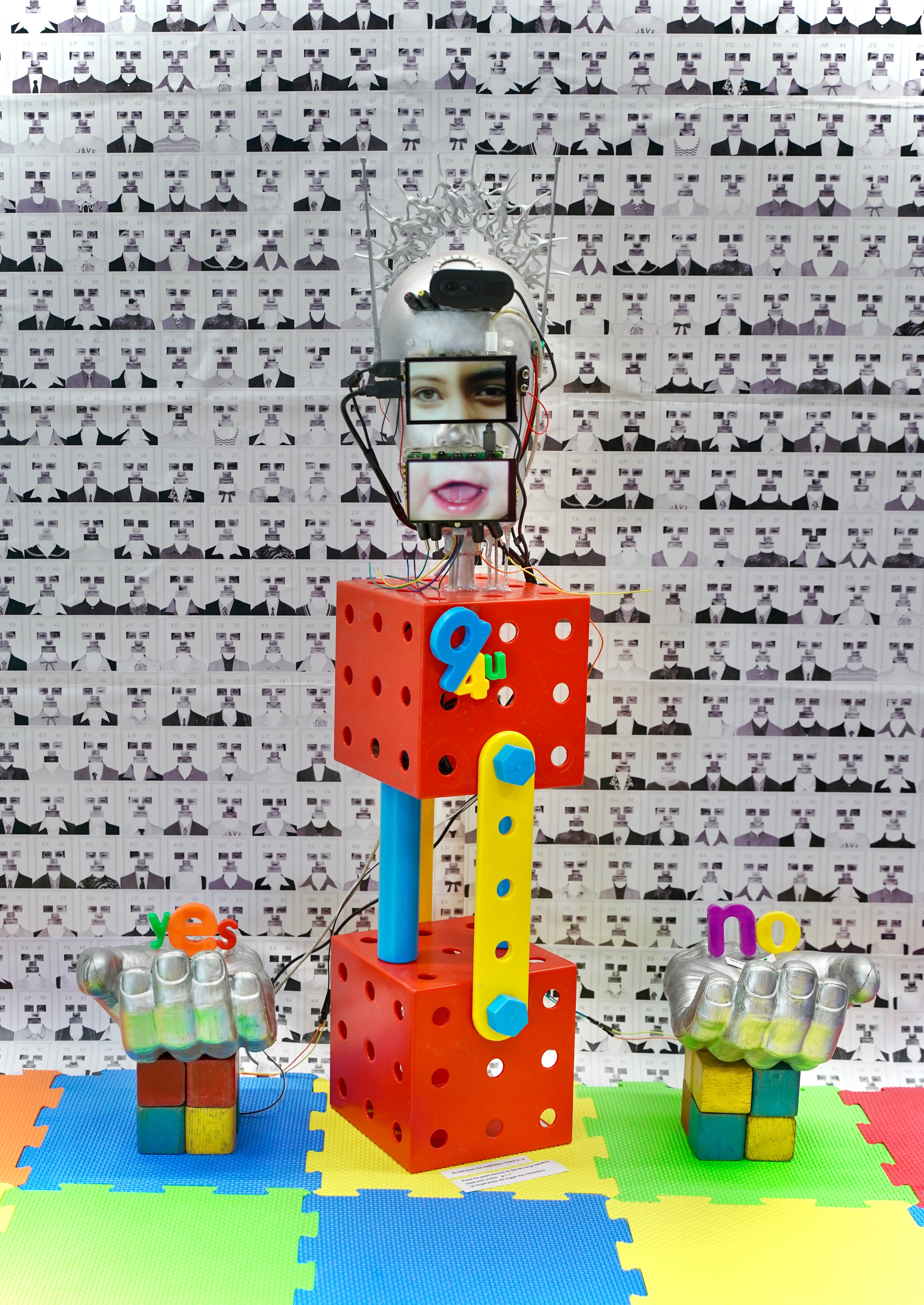

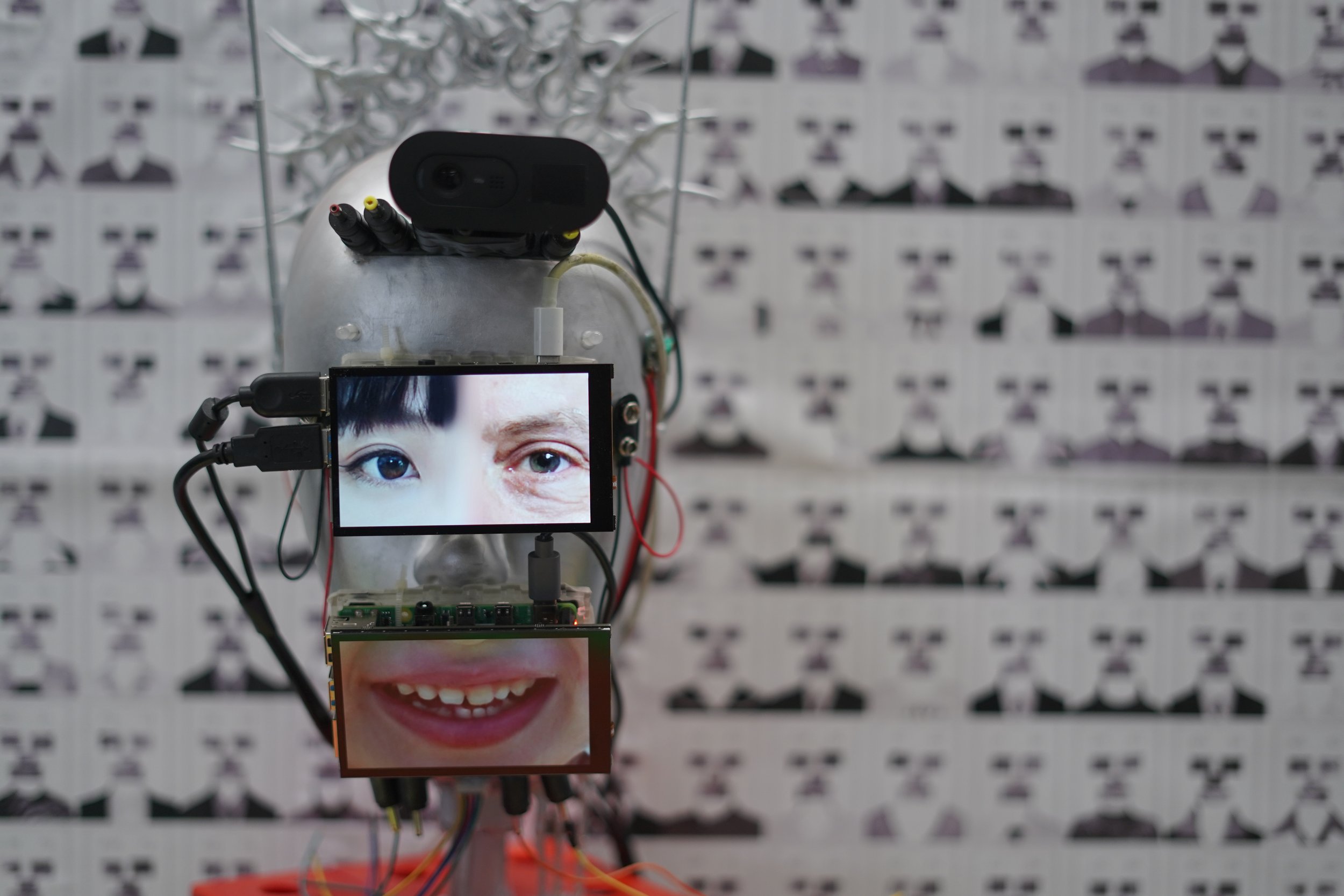

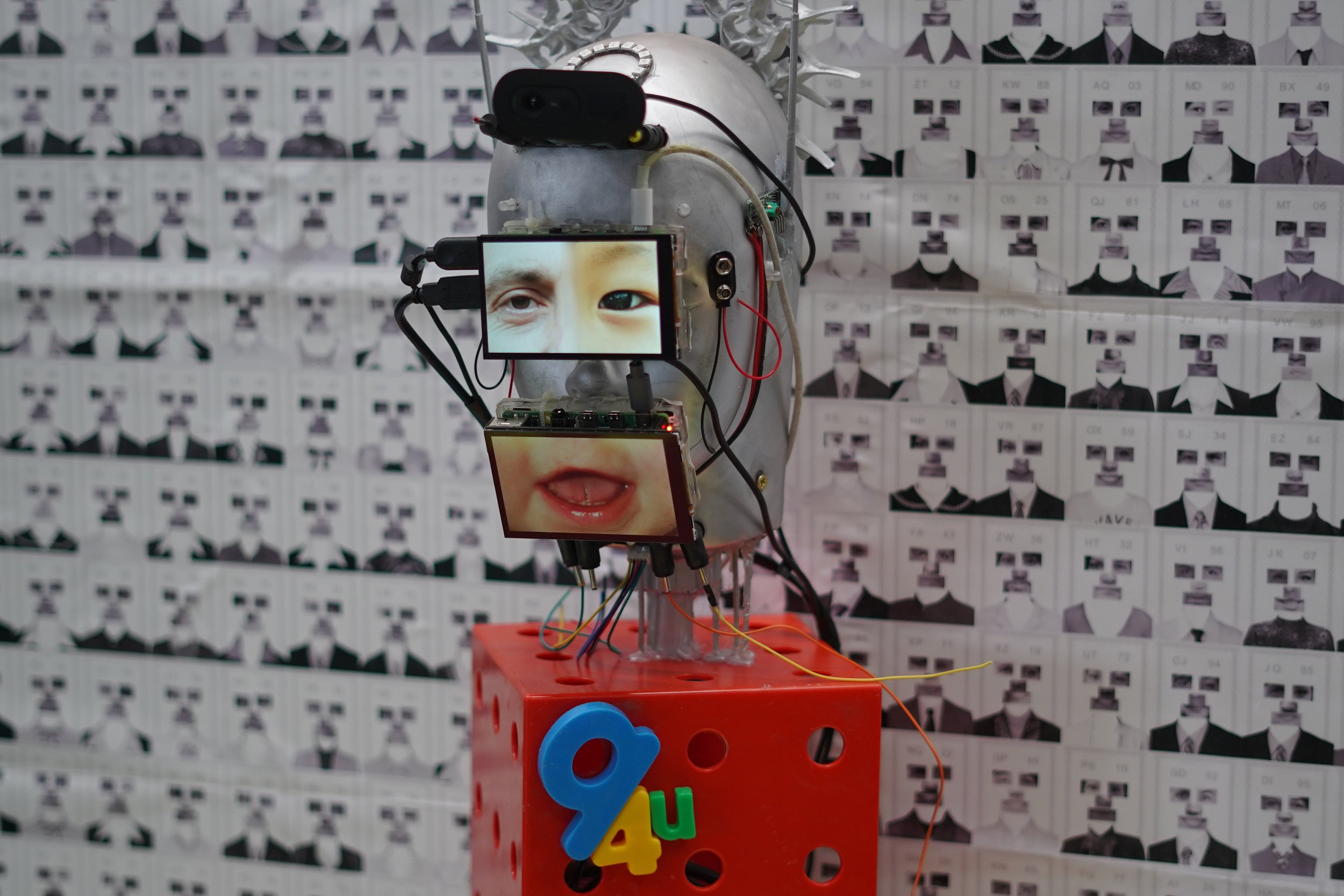

In terms of materials, I combined children's plastic toys with digital interactive devices. I hoped the audience would feel as if they were playing with the work while interacting with the installation, which heightens the weirdness and black humor of the piece.

Once the exhibition space was confirmed, we produced promotional posters and an exhibition layout based on the concept of our work.

CONTROL: Uncanny Valley Show


Medium: Screens, Retro toys (plastic), Electronic components

2024

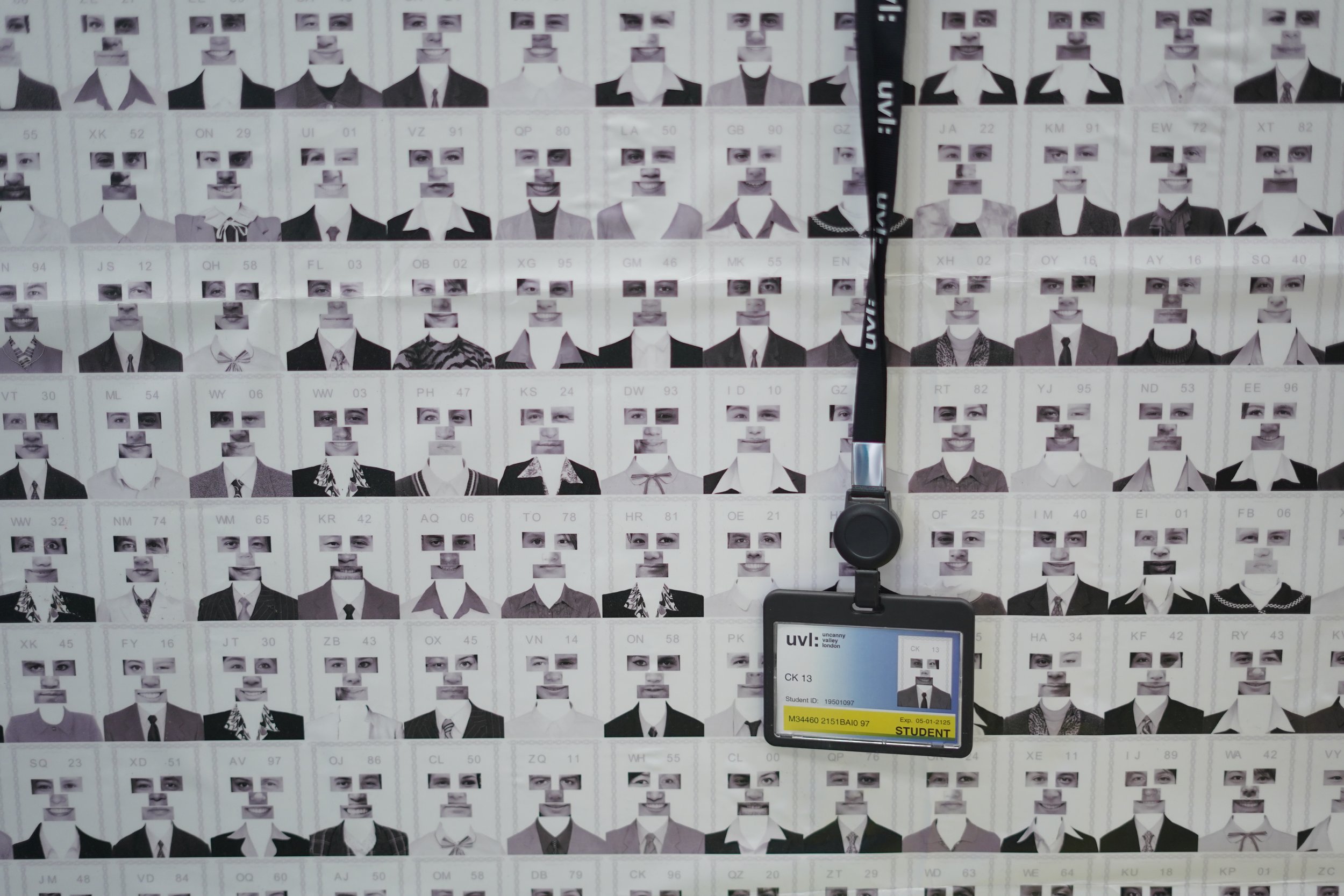

I used NFT image generation techniques to deconstruct and recombine AI-generated facial features, producing 5,000 unique Uncanny Valley face photos. These images were intentionally designed to resemble ID card photos, each labeled with a random string of letters and numbers to simulate a name.

The exhibition at Copeland Gallery involved 200 fake ID cards, branded as UAL's UVL (Uncanny Valley London), which were distributed among students and staff. The installation aims to critique and satirize the blurring of human self-identity in the AI era and the pervasive infiltration of AI into the real world. Through this work, I highlight the complexities and ambiguities of identity in an increasingly AI-dominated society.

CONTROL: Uncanny Valley Show, presented at the final graduation exhibition, is the third stage of the UVL series. Working with JOJO from CCI, I recreated the role of an interviewer from the UVL world in the real exhibition space. The interviewer interacts with the audience as a humanoid interactive art installation, further developing my UVL worldview. I use the concept of a toy house to express my views on AI, and the audience can interact with it and make their own choices.

Photos of audience interaction at the exhibition

After the Summer Show, we exhibited this work again in a new exhibition at Espacio Gallery.

Research Festival

For the Research Festival, I also updated my original proposal. I planned to make a film entirely generated by AI with my classmates Jenny and Anqi, who are also researching AI. We divided the film into two chapters based on the two specific directions of AI we are studying: AI and the Uncanny Valley Effect, and AI and Mysticism. Through the film, we also aim to convey what our respective topics have in common and our shared thoughts on AI.

In terms of content production, the film is created entirely by us working with AI, covering the video images, the sound design and the writing of the script. We used four AI tools in total to achieve this. In terms of screening format, we hoped to break with the traditional screening mode by having AI play each of the story's characters, so that the protagonists of the two stories could talk to each other and hold a question-and-answer session with the audience. We decided to use ChatGPT's voice function to achieve this. The plots of the two stories draw on the work the three of us produced in Unit 1 and Unit 2.

Part of the preliminary test video

On October 17th, we held a preliminary test screening and workshop of the video sample in our Computational Arts department. Tutors and Year 1 and Year 2 students gave us feedback and suggestions for improving the content and form of the video.

The screening workshop was divided into three parts:

Part 1: Film screening

Part 2: AI character conversation

Part 3: Q&A between the audience and the AI characters

Of these, the Q&A between the audience and the AI characters was the audience's favorite part, but many aspects still needed improvement.

October 17th Studio Preview Screening

Photos of the test screening



“Synthetic Prophecy” Version 17.10

AI Ryan Stella Talk Version 17.10

We held a recorded rehearsal with Anna at the Peckham Road Theatre. From this experience, we gathered three key areas of feedback from Anna and the audience for improving the screening and interaction experience at the upcoming Research Festival:

Include a more detailed introduction to who each artist is, your individual interests, and how you came together.

Explain how you interacted with the AI characters and which specific tools you used for image creation.

Consider miking the phones to enhance audio clarity in the space.

This rehearsal provided us with valuable insights that will help us refine the presentation further in preparation for the Research Festival.

November 8th Anna’s Feedback

(recorded rehearsal)

Photos from the screening

Exhibition plan for the Research Festival at South London Gallery

After discussion, we finalised the plan for the Research Festival and created a mock-up image illustrating how we intend to set up the phones in the space.

Introduction and Background

We will begin with a brief introduction to the artists, highlighting each individual's unique interests and how these perspectives came together to form the collective. We'll outline our collaborative journey, offering insight into how our backgrounds and shared artistic values shaped the creation of Synthetic Prophecy. We will provide context on the thematic focus and on how we explore AI, storytelling and human-AI interaction using specific tools. This introduction will give the audience a strong foundation for engaging with the work on a deeper level.

Screening the film Synthetic Prophecy

The screening will showcase the collaborative integration of AI in the creative process, blending storytelling, digital aesthetics and AI-generated elements to create a thought-provoking experience.

AI Character Dialogue

Following the screening, we'll present a recorded conversation between the two AI protagonists from Synthetic Prophecy, delving into their unique perspectives on AI and the nature of their own identities. This dialogue adds depth to the characters and gives the audience direct insight into their viewpoints and motivations. The conversation will serve as a bridge between the narrative of Synthetic Prophecy and the larger themes of AI and human agency, inviting the audience to think critically about AI's role in society.

Real-Time Interaction with the AI Characters

We'll use a pre-trained ChatGPT model to enable participants to take part in a Q&A session with the two AI characters. We look forward to seeing how people respond to the film and to exploring broader perspectives on AI within this interactive context.

Technical Setup Considerations

Based on Anna's feedback, we will improve the technical setup by adding tripods and microphones to the phones. This configuration will improve the audience experience by ensuring clarity in the AI dialogue, as well as in audience questions and responses.

On-site simulation effect diagram

RF24, South London Gallery